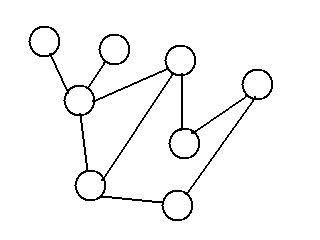

1) How do you create a grammar that can sufficiently understand context? The de facto way of understanding grammar is by envisioning it as a tree (for more technical folk, this is a phrase-structure grammar, give or take a few technicalities):

[Source: http://july.fixedreference.org/en/20040724/wikipedia/Phrase_structure_rules]

This isn't just helpful for understanding the meaning and structure of a sentence but also for understanding more broad ideas such as an entire story. Now, I should make clear that grammar only deals with the syntax of a sentence and not its semantics. That means that it's not dealing with what the sentence means (see above) but only with the structure of the sentence.

The essence of these grammars is context. The context of the rest of the sentence tells us what words can come next in a sentence. If the sentence under construction is "Colorless green ideas sleep ____", the blank spot can't be filled with a noun; that doesn't make any sense. At the end of the day, however, these simple hierarchical phrase-structure grammars are not powerful enough to deal with the full expressive power of human language. They also don't deal with the semantics of the sentence; there's no grammar out there that can tell us the above sentence is meaningless and doesn't make any sense.
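To make the "syntax, not semantics" point concrete, here is a minimal sketch of a toy phrase-structure grammar in Python. The lexicon, the three rules (S -> NP VP, NP -> Adj NP | N, VP -> V Adv | V) and the function names are all assumptions made up for illustration; real grammars are far larger. It accepts "Colorless green ideas sleep furiously" and rejects a noun in the blank, but nothing in it knows the accepted sentence is nonsense.

```python
# Toy lexicon mapping words to parts of speech (hypothetical, illustration only).
LEXICON = {
    "colorless": "Adj", "green": "Adj",
    "ideas": "N", "dogs": "N",
    "sleep": "V",
    "furiously": "Adv", "soundly": "Adv",
}

def parse_np(tags, i):
    """NP -> Adj NP | N. Return the index just past the NP, or None."""
    if i < len(tags) and tags[i] == "Adj":
        return parse_np(tags, i + 1)
    if i < len(tags) and tags[i] == "N":
        return i + 1
    return None

def parse_vp(tags, i):
    """VP -> V Adv | V. Return the index just past the VP, or None."""
    if i < len(tags) and tags[i] == "V":
        if i + 1 < len(tags) and tags[i + 1] == "Adv":
            return i + 2
        return i + 1
    return None

def is_grammatical(sentence):
    """S -> NP VP. True only if the whole sentence is consumed."""
    # Unknown words get the tag None, which no rule accepts.
    tags = [LEXICON.get(word) for word in sentence.lower().split()]
    after_np = parse_np(tags, 0)
    if after_np is None:
        return False
    return parse_vp(tags, after_np) == len(tags)

print(is_grammatical("Colorless green ideas sleep furiously"))
print(is_grammatical("Colorless green ideas sleep dogs"))
```

The first call succeeds because an adverb is allowed after the verb; the second fails because this toy grammar has no rule that puts a noun there.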

The last statement I made was loaded for a number of reasons; for those who spotted that, I'll put the question more properly: teaching a computer how to make grammatical sentences is not the same as teaching it how to communicate through natural language. I don't believe that any grammar like the one above could be used to comprehend semantics.

2) So, let's talk a little bit about semantics. The meaning of a sentence and the meaning of a story are very similar in two particular places:

First, just as a story (or a part of a story) has multiple meanings, so do sentences or parts of a sentence; not only do words and phrasings have multiple definitions ("let's talk about sex with Doug Funny" vs. "let's talk about sex with Doug Funny"), but there's also an inherent ambiguity to pronouns such as "he", "she" or "they" that can only be given definitions in the context of what the person is saying. A good analogue to the latter part would be how a story may reference that "Elvis was playing on the jukebox" and so we use what we know of Elvis in the real world to fill in the blank. The range of interpretations is changed by the surrounding context, with new interpretations added and old interpretations removed.

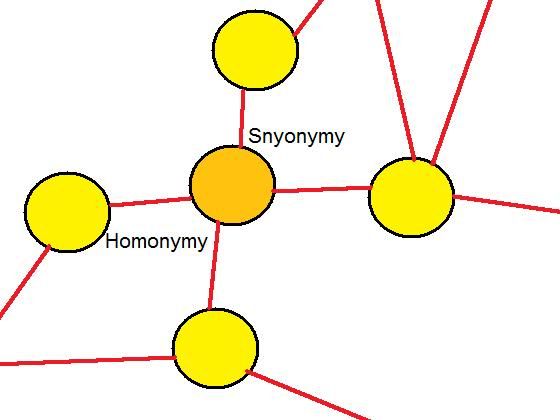

But there is also a corollary: just as we can use many stories to express the same thing, we can also choose from multiple words or sentences to say the same thing. Respectively, these two phenomena are called homonymy and synonymy.

Now consider that synonyms create a sort of redundancy* for figuring out what a sentence means. If a word has multiple meanings, then using a synonymous word or description for the same meaning can make clear what the writer was getting at. Consider "Roger, who loved carrots, was a real jackrabbit" and "Roger, who didn't always show great judgment at parties, was a real jackrabbit." In each one, the range of interpretations is narrowed down by the surrounding context. It's also worth noting that context can be seen as a way of creating redundancy (or creating more ambiguity in other cases).
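One rough way to picture this narrowing is as set intersection. The sketch below is only an illustration: the sense labels, the cue words and the `narrow` function are hypothetical stand-ins, not a real word-sense algorithm. Each sense of a word carries a few cue words, and the surrounding context keeps only the senses whose cues it touches.

```python
# Hypothetical senses of "jackrabbit", each with made-up cue words.
SENSE_CUES = {
    "jackrabbit": {
        "literal rabbit": {"carrots", "ears", "hopping"},
        "reckless partygoer": {"parties", "judgment", "wild"},
    },
}

def narrow(word, context_words):
    """Keep the senses of `word` that share a cue with the context.

    With no matching cues, every sense stays open (full ambiguity).
    """
    senses = SENSE_CUES[word]
    context = set(context_words)
    matching = {sense for sense, cues in senses.items() if cues & context}
    return matching or set(senses)

print(narrow("jackrabbit", ["roger", "loved", "carrots"]))
print(narrow("jackrabbit", ["judgment", "at", "parties"]))
print(narrow("jackrabbit", ["roger"]))
```

The first two calls each leave a single sense standing; the third, with no helpful context, leaves both.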

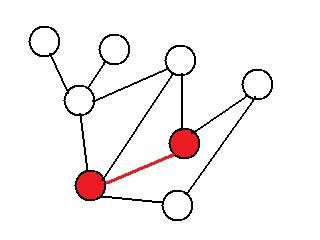

3) Associativity plays a huge role in understanding things like narratives and semantics in general. The following is an illustration of how ideas, memory and everything else fundamentally work in the brain:

Your Brain (artist's rendition)

Your Brain Making an Association!

The neocortex, the area of the brain that makes us "human", is fundamentally a network of neurons that connect with one another through simple rules. There may be different parts that neurologists have identified, but none of these discoveries seem to constitute any strict unbreakable rules; different areas of the brain can fulfill the functions of other areas in cases of brain damage, a case of plasticity (a.k.a. redundancy.) To get at the importance of this, let's consider two phenomena:

Ideas: Consider the (masterful) pictures that I drew above. Think of each of the circles as a different idea/concept/description/etc., such as cell-phones or Pride and Prejudice. Now, look at the second illustration and suppose that these two ideas had something in common with each other--you notice this and bam!, they're connected! This is a huge generalization, of course; the brain is packed with billions of neurons and each idea would be something more like a cluster of connected neurons, but the same basic idea is at play. Let's now move on to something more relevant...

Memory: For me, the concepts of memory and narrative cannot be separated. Everything that has "happened" to us is what we remember. I once had an argument with a friend where I insisted that dreams were just a giant dump of data from the brain and that the only reason we remember them as stories of any kind is because we make sense of that data after the fact; that that's the way our memory works. He said in return "but then you're just going into the age old question of what's experience and what's memory."

It should be clear at this point that our memories do not work as some serial recording device like a computer's hard drive or a video cassette; nature has given us a far more sophisticated device. Memory is a network of associations between sensations, ideas, stories and any other kind of experience that one can imagine, hence the insufferable number of references to Marcel Proust's eating of the madeleine in Remembrance of Things Past. We recall through association; something reminds us of something else.
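As a loose sketch of "recall through association" (a toy model, not a claim about how neurons actually do it), memory can be drawn as an undirected graph where a cue brings back whatever is a few links away. The class name, the `max_hops` parameter and the example memories are all assumptions chosen for illustration.

```python
from collections import defaultdict, deque

class AssociativeMemory:
    """Toy memory: ideas are nodes, associations are undirected links."""

    def __init__(self):
        self.links = defaultdict(set)

    def associate(self, a, b):
        # An association runs both ways: a reminds us of b and vice versa.
        self.links[a].add(b)
        self.links[b].add(a)

    def recall(self, cue, max_hops=2):
        """Return ideas reachable from `cue` within `max_hops` links, nearest first."""
        seen = {cue}
        queue = deque([(cue, 0)])
        recalled = []
        while queue:
            idea, hops = queue.popleft()
            if hops == max_hops:
                continue
            # Sorted only to make the output order deterministic.
            for neighbor in sorted(self.links.get(idea, ())):
                if neighbor not in seen:
                    seen.add(neighbor)
                    recalled.append(neighbor)
                    queue.append((neighbor, hops + 1))
        return recalled

memory = AssociativeMemory()
memory.associate("madeleine", "tea")
memory.associate("tea", "childhood")
memory.associate("childhood", "village")
print(memory.recall("madeleine"))  # ['tea', 'childhood']
```

Note that nothing is stored in sequence here; there is no "tape" to rewind, only links to follow outward from whatever cue happens to show up.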

4) Associativity shares much in common with the basic axioms of modern semiotics**. Consider that signs are not defined in absolute terms but assigned in relative terms. Let me try to explain this in detail.

Imagine for now that when I write a word like /this/, I'm talking about the concept. So for example, the word "automobile" is representative of an /automobile/. This is where semiotics started with Saussure; each word was an arbitrary token used to reference some object in the real world, so for example:

"Tree" --> /tree/

"Blue" --> /blue/

"Dog" --> /dog/

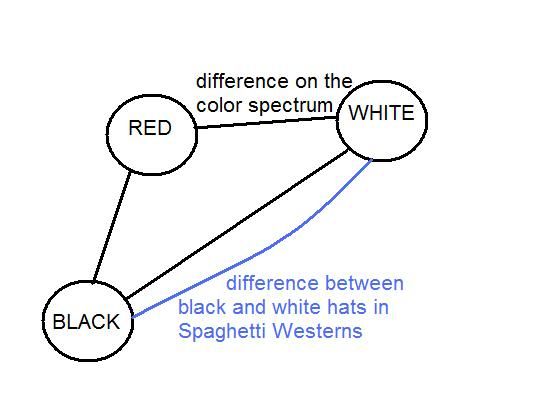

But now consider the difficulty of defining something in terms of absolutes. You can say that you like the color blue better than the color red, but you can't say that you like the color blue better than a tree. There's no comparison between a color and a plant. So instead, the idea of a sign system was coined, which was the idea of defining things in relation to each other so they could be compared. So red and blue belonged to the system of colors and trees and roses belonged to the system of plants. But, any one word or concept can belong to multiple systems. For example, if you're talking about what to decorate your garden with, you can say "I like trees better than garden gnomes." whereas you couldn't if you were having a discussion about plants.
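A minimal sketch of this idea, assuming we model each sign system as nothing more than a named set of members (the system names and the `shared_systems` helper are hypothetical): two things can be compared only inside a system that contains both of them, and one sign may sit in several systems at once.

```python
# Hypothetical sign systems, each just a named set of signs.
SIGN_SYSTEMS = {
    "colors": {"red", "blue", "black", "white"},
    "plants": {"tree", "rose"},
    "garden decorations": {"tree", "garden gnome"},
}

def shared_systems(a, b):
    """Return the names of every sign system that contains both signs."""
    return {
        name
        for name, members in SIGN_SYSTEMS.items()
        if a in members and b in members
    }

print(shared_systems("blue", "red"))            # {'colors'}
print(shared_systems("blue", "tree"))           # set()
print(shared_systems("tree", "garden gnome"))   # {'garden decorations'}
```

"Tree" belongs to two systems here, so which comparisons make sense depends on which system the conversation is operating in.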

I'm going to say for the record that this is all a simplification for many reasons, but I digress. Consider how this is similar to the concepts of homonymy and synonymy. The color blue can be referenced in multiple contexts. Conversely, we can think of the color blue as having multiple definitions; it can mean a color in the rainbow, or it can be part of a list of visual motifs in a movie. In order to see it this way, however, we have to accept that an idea can only be defined in opposition to other ideas. Let's take a look:

Note that while there are multiple contexts for the colors black and white, each of these contexts is defined by talking about how they are different from other things.*** That's how a sign system is formed; there is nothing explicitly defined. We can define a system of colors because of the comparable differences we see between them; same with a system of hats in Spaghetti Westerns. This is the concept that the French structuralists knew as difference. Meaning is relative.

5) So what was this all about? Where's the new heuristic?

Note the big glaring similarity between my semiotic diagram and the diagram of the brain. Did you see it? They're both networks. A sign system is created by the ability to associate ideas with one another, which requires that they have some similarity in form. One might say that in order to contrast two ideas, you first have to compare them (bonus points to those who can find a comparison between cell-phones and Pride and Prejudice.)

So far we've been talking about the similarity in function between a semiotic network and a neural network. Now I'd like to explain why I think phrase-structure grammars are insufficient for understanding semantic context. From a computational standpoint, they're quite beautiful; the weakest phrase-structure grammar, a context-free grammar, is itself a vast improvement over the most primitive model of computing, known as a finite state machine. But one thing that the concept of phrase-structure grammars doesn't seem to take into account very well is the concept of redundancy, which we saw was crucial for understanding how context can be narrowed down by having multiple words available as synonyms for the same concept. In simple terms, they do not have a built-in mechanism for dealing with ambiguity.

As we know, using many synonyms can narrow down the range of interpretations. But here's the question that many would have: "why should words have multiple meanings? Isn't that silly and just going to confuse us?" Yes, maybe, but it also makes it much, much, much easier to describe something, because we can find a word with the right connotations and then add in redundancy in order to weed out unwanted interpretations (again, I understand this is a simplification.) In essence, when it comes to the meanings of words, sentences, stories or anything else (this concept applies to all of these things), we get something that looks like this:

We should be understanding language as a network that is capable of combining many different ideas rapidly and unpredictably but also capable of creating redundancy that allows precise and novel things to be expressed without complete confusion. Yeah, that's it.

I may post a follow up to this essay that more fully investigates the implications of this model. For now, I've actually gotten tired of writing this post. I much prefer talking about Dumbo on a winter afternoon and the like.

---------------------------------------------------------

*For more technical types, this is a more precise definition of redundancy.

**I don't know entirely who coined which concepts in semiotics; but the semiotic theory that I talk about is largely taken from Umberto Eco's semiotic theory.

***For those who are interested in questions about meta-languages, this heuristic may come to explain away a lot of my problems with it. One of the problems of semiotics is that we need a meta-language to evaluate sign systems. Notice, however, that in defining black and white in opposition to each other in terms of hats and colors in the spectrum, there was a difference in form. One sign system included red whereas the other one didn't, thus making them distinctly different sign systems irrespective of any explicit links.

That is to say, that if both systems solely included black and white, they would be the exact same sign system. It may actually be a little more complicated in that no sign system should be made up of a set of nodes that is a subset of another sign system's set of nodes, but I think that the same basic principle is at play here.
