A traffic jam at the intersection of interactivity, aesthetics, epistemology, and semiotics

Tuesday, November 15, 2011

The Entropy of Morality

Tuesday, November 8, 2011

Paradigms vs. Narratives

Friday, September 30, 2011

Command and Control

Monday, September 26, 2011

Truth

Tuesday, September 13, 2011

Meta-Entrepreneurship

Wednesday, August 17, 2011

Erudition and Innovation

My confession is that I'm writing this post in preparation for a lecture I'll be giving in a few days to the AIESEC conference. I figured what better way to help jog my mind than present the general gist of what I'm thinking to my viewers.

So without further ado, what will I be talking about in this post? Something that I think hasn't been paid close enough attention: the

My story is one that some readers of this blog probably know, but if there are any new readers out there, perhaps not. It was half a year ago that I found myself signing on as the co-founder of Fear of Software, and only a couple of months ago that I quit my day job as my initiation into becoming a fully funded entrepreneur. In that time, a lot has happened as I gained erratic but valuable experience and learned from the wisdom of my co-founder Nick LaRacuente, who was wise enough to get me out of the misguided logic of thinking "I'll just build it and they will come" or expecting to have a 2 year development cycle without any kind of intermediate prototyping. And yet I can't say much about this sort of a thing because with this startup, the story is really only in its opening chapter; the most shocking, difficult and serendipitous episodes have yet to arrive.

On the other hand, there's a whole lot that led to my standing right here (or in this case, sitting in front of this computer.) My story begins, well, probably from when I was 5 or 6, but zooming forward a bit... one could safely begin in high school. In middle school, I taught myself how to program and started making video games. Most of the projects were over-ambitious, but they were always fun and once in a while I completed something exceedingly simple. I remember specifically, however, in my first year of high school coming across an editorial in Game Developer magazine, which I had a free subscription to, about the potential for games to be a legitimate art form on the level of cinema, books or even fine art. The idea hit me very hard; I was your average 14 year old, mostly interested in video games, Coca-Cola and generally trying as best I could to look cool; but this idea for some reason was just like a shot to the head; I couldn't shake it!

Unfortunately, I didn't always have my stuff together in high school, so my projects generally got a running start and fizzled out just as fast, but something else was going on at the same time. I found myself for the first time extremely interested in literature; I started reading novels all of the time, even as it interfered with my schoolwork; for a while I even thought that I wanted to become a novelist and write fiction of my own. Film and music also became more interesting to me; before then, I pretty much just watched whatever Hollywood blockbusters looked sufficiently entertaining and listened to whatever was loud enough to ensure hearing loss. At one point I even found myself fortunate enough to collaborate with several artists in my high school on a graphic novel; a project that actually went surprisingly well--it was certainly childish, with all sorts of elves and demons reminiscent of Lord of the Rings with a twist of Saturday-morning anime; but interspersed with poems and philosophical digressions (but don't get me wrong, it wasn't particularly good; this was high school!)

Life was actually pretty interesting in high school. Not much got done, at least not on the surface, but a lot was brewing. I still remember all of the afternoons I frittered away with friends hanging around nearby diners and walking the streets of Manhattan; but I digress, you don't want to hear that mushy stuff. My point is that something was going on in all that time; metamorphosis is never as straightforward as one thinks. Soon afterwards, I entered college; and luckily, a college where I'd have the same good fate of meeting friends that would help me develop further. There's another digression I need to take: good friends are important, they'll be the ones who enrich your experiences and lead you to your greatest ideas. This counts double for entrepreneurship, which is about people, your customers; not just the abstract ideas that one can go on for pages about in a novel or express in a piece of music.

Despite some initial confusion as to what I wanted to do with my time in college, I found myself as a double major in English and Computer Science; English because I had always had an interest in literature and found myself enchanted with the study of books when I tentatively took a class on John Milton and fell in love with Paradise Lost—so dense with potential criticism that I read the entire thing at a rate of about 3 pages per minute, which admittedly left me a stressed out mess as I never had enough time to get my work done and barely passed my all-important math class.

But along the way I also found a few books on my own that opened my eyes to the process by which ideas are generated. In freshman year, I came across a book by the esoteric but highly renowned game designer Chris Crawford*, which rekindled my dying interest in game design. Later, in junior year, I came across The Black Swan by Nassim Taleb, a book which, if you read this blog (or know me in person), you know I never shut up about.

It's writers like these (a list of which would amount to some light name-dropping) that I hope to pay homage to in this post by using their ideas to explain the creative process and get across the simultaneously childishly erratic and gut-wrenchingly rigorous nature of innovation.

These writers, namely Crawford, left me with a new sense of purpose in my endeavors as well. I dreamed of a new kind of video game that would fit my changing self; the more serious self that found video games fun once in a while but couldn't feel engaged in them the same way as when he was a child. They were no longer as captivating, I had grown older and suspending my disbelief was not as easy as it used to be. I felt that this was what had turned most people off of gaming; and I wanted to bring the experience I had had with video games as a child to everyone. So with a new sense of purpose, I decided that I wanted to make video games that were serious intellectual challenges that engaged people's critical thinking, that would create decisions where people would have to inevitably look back and wonder about the consequences of what they did, that would make the player empathize with characters and be too engaged to move through its world as nothing more than a cold and calculating machine. I wanted to make an experience as universal as a movie or a book.

That put me on the path to today's startup, albeit in a very weird way. In junior year I applied for the honors program in computer science in order to work on a technology called Interactive Storytelling. This would be for creating games with stories that the player could change the course of through their decisions; and they would involve lifelike characters and difficult choices that would fit into complex narratives; there would be closure and catharsis, but also ambiguity. I was accepted, and soon enough I found myself overwhelmed. Luckily, a torrent of ideas came from a class in literary theory I had tentatively signed up for; which I'll talk more about soon.

To make things short, after two semesters of hard work, I cobbled together a tiny prototype and presented it to a general audience as well as the computer science department. The reviews were mixed, but I was happy nonetheless. After graduating college with Honors, I went on to work on it in my spare time before taking a hiatus and getting by with my day job as a paralegal. That is, until Nick convinced me to join forces with him to create a startup; and I couldn't say no.

Our two projects, when compared, shared a lot of theoretical qualities. But we decided to put our respective goals on hold when a new market opportunity came up. But I'm not here today to advertise our project, I wanted to talk about ideas!

So, what is "the stuff that dreams are made of?" Well, not dreams; we all know that those come from living in your parents' basement wondering when you're going to have a car—no, I meant ideas. Where do we get those?

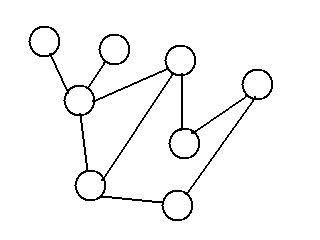

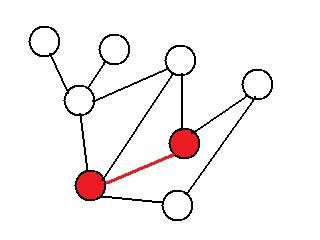

On the most fundamental level, ideas come from metaphors. That's right, every idea starts as a metaphor; a connection we see between two ideas because of their similarity. In your brain it looks a little bit like this two fold illustration I put up once before (you can praise me on my artistic talent in the comments section):

Your brain...

...getting an idea!

So, those two dots that lit up, what are they? Let's come up with a couple of ideas for concepts... okay, right, this isn't the lecture; just a blog post. Okay, so let's say that the one on top is the business cycle in economics; and let's say the other one is... partying! Because who doesn't love a good party, especially during an economic boom! Well, these two ideas are separated because, well, they don't really have anything in common.

Or do they? Let's think about this one. What do we do at parties? We sometimes have a few too many drinks. Did I say drinks? If any of you are religious or just generally more well behaved, let's just say extra-sugary fruit punch, the kind of stuff that will make you bounce off the walls before you get dizzy from all the running around and then crash. So, either way, you feel GREAT, and then you have a big stupid hangover; and we know that that's the price we pay for it, at least I hope we're all on the same page about that...

Now, what about an economic boom? Well, sometimes these booms are caused by a bubble. People get very enthusiastic about making a killing on the hot new asset, like say... big houses in the middle of suburbia. The price goes up 20%, then 50% then suddenly they're three times as expensive as they were two years ago. It can't just continue like this though; how many people are really going to pay a million dollars to move into a new house? Are you going to do that?

So what happens? The bubble bursts! And mind you, for those who are interested in the history of finance, this once happened in Holland over the price of tulips until... guess what? Somebody realized you can just grow them yourself. So suddenly, prices have to return to a level that actually makes sense; but with everyone having spent so much money on houses and others expecting to make a lot of money selling houses and all the people who were hired to build houses because they were so profitable, guess what happens to all of them? Not very pleasant to think about; a lot of jobs, money and opportunities are lost. The economy goes through a recession, readjusting to the sudden change that's happened.

But wait, there's a connection there. Something caused things to go up, to become super-active, and now that it's gone, everything comes crashing back down. And that's when we get the idea that maybe there's a connection; we realize that we need lows in order to correct the highs. So there you have it, now you know that binge drinking is a lot like a bad asset bubble.

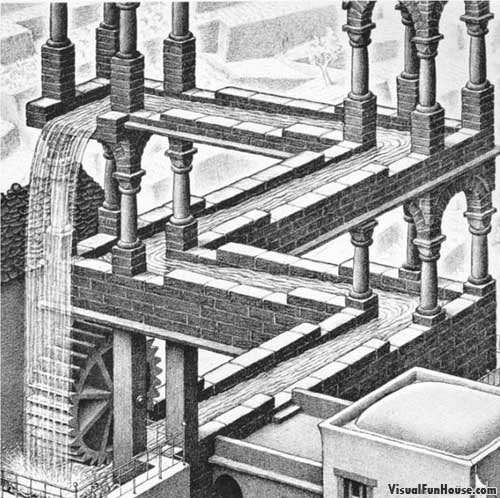

These are the metaphors that help us advance in technology and discover new customer needs. Don't believe me? SimCity, one of the most successful video games of all time, was inspired by the reproduction of cells.** The beautiful prints by M.C. Escher are derived from mathematical concepts of recursion, topology and infinity (among many others.) More recent AI methodologies such as neural networks are inspired by biological systems rather than straight-up computations. Even our own idea of what goes on in the world is shaped by far-away stories; we look at politics through the lens of historical narratives and fictional fables. People in the United States are always asking "Are we Rome?" Sometimes overtly, but also implicitly whenever they ask about the future of that country.

This happens even on a deeply neurological level. Our brains are ultimately pattern seeking devices and look to find patterns that match other patterns. We see it even in our everyday lives; have you ever seen a shadow and thought a dangerous person was waiting in a corner to attack you; only to find out that it was a garbage can? What about being startled at the sudden rustle of leaves in the middle of silence? This is pattern recognition at its most basic, and it finds its way even into our highest levels of thought.

But before dismissing it all as illogical and irrational, you should remember just how much smarter you, or anybody reading this post, are than a computer. That's right, we're so good at this game because we're not computers! Embrace it, be childish and let yourself find connections wherever they are. But how do we go about doing that? Well? Two things.

First, read. Read on every subject you can and don't worry about working on your specialty. You have your entire life to learn the newest Java API or the latest updates to the .NET framework (this part is for tech entrepreneurs; ignore it if you're just a reader that happened to get this far in my rant.) In fact, you'll find that the demands of most situations are so specific and strange that you won't be able to figure out what you need to know in advance; so don't sweat it (but do study your fundamentals, they come in handy.) Pick up some fiction and poetry; or if you're the more serious kind (like myself for a while), bury your nose in some history, economics, psychology or anthropology. Be sure to learn some math too; big concepts that can help you understand things as systems. But you should all in all be looking to understand things in three categories: systems, ideas and the human condition. The third of those comes in fiction and poetry; stuff that really gets at what makes people tick, but that also requires doing another thing...

Live! There is no erudition without experience. Or put another way:

"Experience, though no authority Were in this world, would be enough for me" -The Wife of Bath

A lot led to where I am now; much of it serendipity and a slowly accumulated pile of books, but I'm most prepared for where I am now from the experiences I've had messing up, doing the wrong thing, doing all kinds of things that left me kicking myself in frustration. If it weren't for that, there would be nothing to hold all this together, just a bunch of gobbledygook.

Mistakes, there's an important word, and it reminds me of another question. What do we do with all these crazy ideas? Where is the empiricism? Ah, there's another idea I have to credit Chris Crawford with...

A T-Rex for ideas!

That's right, our ideas are just all vulnerable little sheep, and we need a T-Rex to rip all but the toughest of them apart. That means attacking your ideas from every angle. Forget all this about being nice to yourself, be a contrarian, drive yourself crazy; be like that kid who keeps asking endless questions about why he needs to take a bath! Not only that; go out and talk to people about your ideas. This is how Nick and I figured out what to do with our own product; we went out and talked to people. And as always, you don't always hear what you want to hear; but that's good, you're growing. Finally, go out there, and just make some mistakes. Not too big though, I don't want anyone coming back here with a missing limb!

As Kanye West once said; "that that don't kill me, can only make me stronger!" So go out there and get stronger, I'm rooting for all of you. And thank you for reading.

---------------------------------------------------------

*He has a wonderful website for those interested: http://www.erasmatazz.com

**See John Conway's The Game of Life

Saturday, August 13, 2011

Consciousness (In 3.5 Steps)

1) Existence and Consciousness are inseparable

From an epistemological standpoint, I don't see any way these can be safely separated. Therefore, I'm assuming that they can't. There are other reasons, which are still a mess in my head, but I am more or less working from what I consider the indisputable truth of subjective experience.

2) Experience is the friction of incomplete information

Why do we never seem to remember locking our door? Why do we remember surprises more than routine things? Because we failed to predict them, and a failed prediction signifies new information. In information theory, this is called information content and is measured in bits, roughly how many bits it takes to store; if a probability distribution has a single outcome that is 100% likely to occur, that probability distribution has no information content.
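For readers who like to see the arithmetic, here is a minimal sketch of that claim in Python. The function names and the example probabilities are illustrative assumptions of mine, not part of any particular library; the formulas are the standard Shannon definitions, with logarithms in base 2 so that the answers come out in bits.

```python
import math


def surprise_bits(p):
    """Information content, in bits, of a single outcome that has probability p."""
    return -math.log2(p)


def entropy_bits(probabilities):
    """Average information content, in bits, over a whole distribution.

    Outcomes with probability 0 are skipped, since they never occur and
    contribute nothing to the average.
    """
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


# Hypothetical distributions, chosen only for illustration.
certain_outcome = [1.0]        # one outcome that always happens
fair_coin = [0.5, 0.5]         # two equally likely outcomes
routine_day = [0.99, 0.01]     # locking the door vs. something unexpected

print(entropy_bits(certain_outcome))  # 0.0
print(entropy_bits(fair_coin))        # 1.0
print(entropy_bits(routine_day))      # small: most days tell us almost nothing
print(surprise_bits(0.99))            # the routine event carries very few bits
print(surprise_bits(0.01))            # the rare event carries far more
```

The certain outcome comes out at zero bits, which is the "no information content" case described above, while the rare event in the routine-day example carries far more bits than the routine one. That is one way to read why the surprise is what gets remembered.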

Another way of thinking about this is that information content is also called entropy*, which in physics is the irreversible disorder that arises from motion, which is very related to that other phenomenon we call friction.

Wolfgang Iser brilliantly applies this theory to the experience we have when reading books and just about everything else in life seems to follow. When are video games boring? When there's no challenge left. When do movies suck? When they're way too predictable. But thankfully, things are always at least a little bit different than we anticipated them.

3) You need identifiers to signify difference but you need difference to distinguish the identifiers from one another; this paradox creates a regress loop.

To put that in English, let's consider the colors of objects. If everything were the color red, we'd have no such thing as color. We notice things because there are differences. This ties back in to what I said about information content in the previous post; if there's a 100% probability of one particular outcome, then there is no information.

So if we want difference, we need some trait in which these things are different. But where does this trait come from? It would need to be distinguished from something else. Therefore, we're faced yet again with the same problem ad infinitum.

One last thing is needed then, to close this odd regress loop. Once we've done that, we essentially have a system in which we can continue to seek information in a cycle of anticipation and surprise but never reach the end:

While writing this, I realized that I was missing the answer to this part. I will try a basic sketch of this:

-There is some kind of self-reference that closes the loop of this problem with infinite levels of "difference"

-The result manifests itself as a paradox (or many of them--perhaps they all mean the same thing) such as Godel's Incompleteness Theorem or the Time Dilation paradox.

-This creates a dog-chasing-its-tail effect in which a recursive pattern creates a fractal of uncountable codes--the friction of these being what we know to be reality.

But feel free to take a stab at the last part and let me know what you think...

I should note that by the time I finish Gödel, Escher, Bach, it may be the case that Hofstadter beat me to the punch; in fact, he says that his thesis is that Meaningless Symbols Acquire Meaning Through Self-Reference, but so far it has proven to simply be the connective link that I have been missing between the three steps that I outlined above; I just hadn't put them together the right way, but they were all there a year ago.

------------------------------------------------------

*Forgive me if I've abused the poor term; I'm not a physicist.

Friday, August 5, 2011

Wednesday, August 3, 2011

A definition of narrative?

My structural definition of a narrative:

A collection of events* that makes sense of itself without the use of axioms.

*Was hesitant about using the term "event", but I think that it's more precise than saying "signs"--there's a self similarity here I think; the idea of an event is itself inscribed in narrative.

Thoughts?

Thursday, June 23, 2011

...I cannot find the book I found it in. I will find it later, sorry. May be in a book I left downstairs.

That Old Humanities Argument

As an English major in college, I had to struggle with this question myself; I was asked by other people and even had to deal with the prompt in a literary theory class (which I utterly failed at doing.) It is worth noting that a lot of my work in literary theory has been applicable to what I'm engineering now, but that's beside the point to me because I know that it wasn't all that this was about. I certainly see literature as an important metaphysical experiment for philosophers, but I don't know how I feel about philosophers either.

But around a year ago I had read about Stanley Fish's book Save the World On Your Own Time, which apparently* argued that trying to find some political or economic justification for the humanities denigrates it by denying the idea that it may just be good in and of itself (does everything really come down to money and survival?)

While I was taking a break today that line of thinking crossed over with all the time that I've spent thinking about Edmund Burke and Nassim Taleb and it dawned on me that I had been missing the obvious for years; that perhaps the importance of literary criticism is in the fact that despite having no explicit justification for it, we still continue to read, teach, analyze and deconstruct stories; value a supposedly "arbitrary" literary canon, ask questions about things that never happened and give interpretations in the absence of right or wrong answers.

To me, asking why we value literature, spend so much time teaching it to students and even have tenured academics who spend their whole life studying it is like asking why we have religion or inauguration ceremonies or hold doors open for other people. At this point, it's tradition and part of a deeper logic that we can't ever presume to understand--a point made tirelessly by Edmund Burke in the wake of a disastrous French Revolution based on simple top-down models. Simply put, I don't think that the world would be better off if we stopped holding doors for other people and I don't think that we'd be better off not studying literature.

On a more concrete note, I think it is possible to glean the value of literary criticism and it is related to the importance of things beyond economic concerns. We live in a world richly populated by cultural phenomena. Being part of that world means understanding our cultural heritage and our shared idea of what it is to be human (cliche, I know, but isn't it true?) Would you refrain from teaching your kid table manners or how to talk to elders?

Whether it's through high school English, Hebrew School or wrestling in the grass with your classmates, we all have and all need rites of passage. Part of the humanities is spiritual training, the rest is something else.

----------------------------------------------------------------------------------------------------------

*No, I haven't read it, just making that clear. A second-hand summary did in fact raise some interesting points for me.

Monday, June 13, 2011

Childhood

Friday, June 3, 2011

Thursday, May 5, 2011

The Confirmation Bias

Sunday, May 1, 2011

The Triad

Saturday, April 30, 2011

Minimal Interfaces/Information

Tuesday, March 15, 2011

On Stories and Trying to Figure Them Out

The hodgepodge of theories, concepts and examples with which I’ve explained the problems of interactive storytelling has not been one that lends itself to being quickly wrapped up in a concluding page, let alone five. It would perhaps be more fitting to end on a story; to further interconnect the many concepts explained, and if we’re lucky, see a new idea emerge. After all, if there is one thing we academics can agree on, it’s that he who of those delights can judge, and spare to interpose them oft, is not unwise.

Only a year ago, I had been recently accepted into the Honors program in computer science to begin a project whose name was simply “Interactive Storytelling.” My proposal, although over ten pages, contained little of what was needed to understand anything of the problem at hand; my understanding of narrative was confined to narrow formalist ideas of plot and a handful of scattered critical concepts.

By chance, I ended up in a class on literary theory after not making it into a different English class. Combined with the various books with which I supplemented my project, I found myself understanding narrative in ways that finally bore fruit. Combining my knowledge of mathematics and literary theory, I began to see stories themselves in a different way, abstracting them to structures juxtaposed in varying dimensions.

Nonetheless, as every structuralist knows, rules are meant to be broken and as I found myself writing this paper, a tension between the easily imagined existence of signs within systems and the inability to clearly explain their relationships to one another within some space kept me spending an entire day wondering what I was really saying, how I could find that balance between saying what needed to be said and leaving the rest open. I saw then what Stanley Fish talked about in another part of Interpreting the Variorum, a moment of tension in the process of reading.

That tension has seemed to exemplify the work I have done on interactive storytelling in the past year more than anything else; a constant tension between the axiomatic and the narrative. Mathematics is closed; there is always a clear path to understanding it. But only narratives endow us with any kind of meaning; even the great Paul Erdős would always say after proving an exceptional mathematical theorem “it’s in the book!” I’ve been standing on the edge throughout the entire year; interpreting stories with technology, looking over as I make another carefully thought out conjecture.

Yet there has been a remarkable sense of fulfillment from it all. With each day, my own project and my own writing on the subject become increasingly robust and meaningful, even as I come to terms with this lack of understanding. Every day I am capturing narratives more, not less, even as they appear more elusive with each day. Perhaps more than anything I’m gaining satisfaction from this very lack of reconciliation. After all, this tension is the mark upon us which narrative leaves; narrative exists in the friction that comes from not quite understanding, from the vulnerability of any structure we create, no matter how simple. And if such tension is what defines a narrative, then this project, this critical technical practice, is one as well.

Tuesday, March 8, 2011

Lies and the Lying Semioticians That Tell Them

Friday, March 4, 2011

Sunday, February 27, 2011

Men In Black

Saturday, February 26, 2011

EDIT: I just realized that the only way I can articulate my defense of religion is with these little vignettes. I have an idea of why, but I don't want to get into a long essay about it (talk to me—or leave a comment if you're interested in that sort of thing.)

Friday, February 25, 2011

The Garden of Platonic Forms

Literature has no Platonic forms, invalidating the underlying assumption since Aristotle that literature is a way to bring us closer to Platonic forms (Plato believed that poetry and drama were "thrice removed from nature", meaning that they were an imitation of an imitation; but Aristotle believed in the power of poetry/drama to bypass the limitations of Earthly manifestations.)

Structuralism was the most rigorous* way of doing this, but eventually saw its own limits; thus becoming a study of this very cycle of interpreting and then finding new meaning by figuring out how the interpretation contradicted itself or simply didn't apply. Perhaps that's why I have as hard a time as the French understanding any real difference between structuralism and post-structuralism.

--------------------------------------------

*Please take "rigorous" with a grain of salt.